
Contact:

SOCIA Lab. - Soft Computing and Image Analysis Group

Department of Computer Science, University of Beira Interior, 6201-001 Covilhã, Portugal

hugomcp@di.ubi.pt


P-DESTRE

Fully Annotated Datasets for Pedestrian Detection, Tracking, Re-Identification and Search from Aerial Devices

Experiments

Here we describe the empirical evaluation protocol that should be used to report comparable results on the P-DESTRE dataset. In our experiments, to establish a baseline for each task, 10-fold (Tasks 1 and 2) or 5-fold (Tasks 3 and 4) cross-validation schemes were used, with the data randomly divided into 60% for learning, 20% for validation and 20% for testing.
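One way to read this protocol is as repeated random 60/20/20 partitions (ten disjoint 20% test sets cannot fit in the data, so the 10-fold case cannot be a strict k-fold partition). The minimal Python sketch below generates such splits over a list of identifiers; the function name `make_splits`, the split unit (e.g. video sequences) and the seeding are assumptions for illustration only, and the official split files should be used to report comparable results.

```python
import random


def make_splits(sequence_ids, n_folds=10, ratios=(0.6, 0.2, 0.2), seed=0):
    """Return n_folds dicts, each holding disjoint learning/validation/test ID lists.

    Hypothetical helper: each fold is an independent random shuffle of all IDs.
    """
    assert abs(sum(ratios) - 1.0) < 1e-9, "ratios must sum to 1"
    rng = random.Random(seed)  # fixed seed so the splits are reproducible
    ids = list(sequence_ids)
    n_learn = round(ratios[0] * len(ids))
    n_val = round(ratios[1] * len(ids))
    folds = []
    for _ in range(n_folds):
        shuffled = ids[:]
        rng.shuffle(shuffled)
        folds.append({
            "learning": shuffled[:n_learn],
            "validation": shuffled[n_learn:n_learn + n_val],
            "test": shuffled[n_learn + n_val:],  # remaining ~20%
        })
    return folds
```

For example, `make_splits(range(100), n_folds=10)` yields ten folds of 60/20/20 identifiers; splitting by sequence rather than by frame keeps near-duplicate frames of one video out of both learning and test sets.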

The data splits used for 1) pedestrian detection, 2) pedestrian tracking, 3) pedestrian re-identification and 4) pedestrian search are provided below.

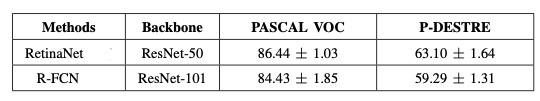

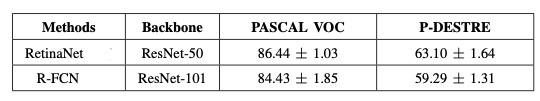

Task 1: Pedestrian Detection

- 10-fold learning/validation/test splits are available [here]

- Baseline pedestrian detection scores of the RetinaNet [T1-1] and R-FCN [T1-2] methods on the PASCAL VOC 2007/2012 and P-DESTRE datasets are available [here]

The Average Precision values at an Intersection over Union threshold of 0.5 (AP@IoU=0.5) obtained by both methods are provided in the Table below, as mean +/- standard deviation values.
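Under AP@IoU=0.5, a detection counts as correct when its IoU with a still-unmatched ground-truth box is at least 0.5. The sketch below computes IoU and a VOC-style AP with all-point interpolation in Python with NumPy; the box format `(x1, y1, x2, y2)`, the input structures and the interpolation variant are assumptions (PASCAL VOC 2007 originally used 11-point interpolation), so its numbers need not match the baseline tables exactly.

```python
import numpy as np


def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truth, iou_thr=0.5):
    """detections: list of (image_id, score, box); ground_truth: {image_id: [box, ...]}."""
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    matched = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    tp, fp = [], []
    # Process detections from most to least confident.
    for img, _, box in sorted(detections, key=lambda d: -d[1]):
        overlaps = [iou(box, g) for g in ground_truth.get(img, [])]
        j = int(np.argmax(overlaps)) if overlaps else -1
        if j >= 0 and overlaps[j] >= iou_thr and not matched[img][j]:
            matched[img][j] = True  # true positive: claims this ground-truth box
            tp.append(1)
            fp.append(0)
        else:
            tp.append(0)  # false positive: low overlap or duplicate detection
            fp.append(1)
    tp, fp = np.cumsum(tp), np.cumsum(fp)
    recall = tp / max(n_gt, 1)
    precision = tp / np.maximum(tp + fp, 1)
    # All-point interpolation: make precision non-increasing in recall,
    # then sum precision over the recall steps.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    steps = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[steps + 1] - mrec[steps]) * mpre[steps + 1]))
```

Per-fold AP values can then be summarised as `np.mean(fold_aps)` +/- `np.std(fold_aps)`; whether the reported deviations use the population (`ddof=0`, NumPy's default) or the sample (`ddof=1`) estimator is not stated here.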

[T1-1] T.-Y. Lin, P. Goyal, R. Girshick, K. He and P. Dollár. Focal Loss for Dense Object Detection. IEEE Transactions on Pattern Analysis and Machine Intelligence, 42(2), pp. 318–327, 2020.

[T1-2] J. Dai, Y. Li, K. He and J. Sun. R-FCN: Object Detection via Region-based Fully Convolutional Networks. In Proceedings of the International Conference on Neural Information Processing Systems, pp. 379–387, 2016.

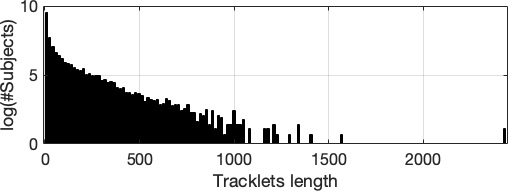

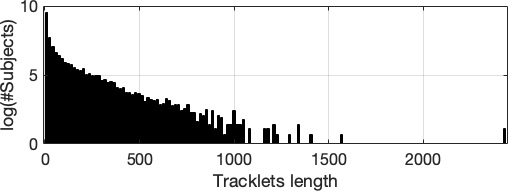

Task 2: Pedestrian Tracking

The sequences of each ID (tracklets) in the P-DESTRE set vary in length, ranging from 4 to 2,467 (mean 63.7 +/- 138.8). The full statistics of the tracklet lengths are as follows:

- 10-fold learning/validation/test splits are available [here]

- Baseline pedestrian tracking scores of the TracktorCv [T2-1] and V-IOU [T2-2] methods on the MOT-17 and P-DESTRE datasets are available [here]

- The hyper-parameter specification of both methods is available [here]
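The tracklet-length figures quoted for Task 2 (minimum, maximum, mean +/- standard deviation) can be computed from per-box annotations with a short Python sketch. The input layout `(video_id, frame_index, person_id)`, the function name and the definition of a tracklet as all frames of one ID within one video are assumptions, not the P-DESTRE annotation format; using the population standard deviation is also an assumption.

```python
from collections import defaultdict
from statistics import mean, pstdev


def tracklet_length_stats(rows):
    """rows: iterable of (video_id, frame_index, person_id), one per annotated box.

    A tracklet is assumed to be the set of frames of one ID within one video.
    """
    frames = defaultdict(set)
    for video_id, frame, person_id in rows:
        frames[(video_id, person_id)].add(frame)  # duplicate boxes per frame count once
    lengths = [len(f) for f in frames.values()]
    return {
        "min": min(lengths),
        "max": max(lengths),
        "mean": mean(lengths),
        "std": pstdev(lengths),  # population standard deviation
    }
```

A standard deviation larger than the mean, as in the reported 63.7 +/- 138.8, indicates a strongly right-skewed distribution: most tracklets are short, with a few very long ones.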

The results attained by both algorithms on both datasets are listed in the Table below, in terms of the MOTA, MOTP and F-1 performance measures:

[T2-1] P. Bergmann, T. Meinhardt and L. Leal-Taixé. Tracking without Bells and Whistles. arXiv, https://arxiv.org/abs/1903.05625v3, 2019.

[T2-2] E. Bochinski, T. Senst and T. Sikora. Extending IOU Based Multi-Object Tracking by Visual Information. In Proceedings of the IEEE International Conference on Advanced Video and Signal Based Surveillance, doi: 10.1109/AVSS.2018.8639144, 2018.
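Once tracker outputs have been matched to ground truth frame by frame, the Task 2 measures reduce to simple totals. In the Python sketch below, MOTA = 1 - (FN + FP + IDSW) / GT, MOTP is taken as the mean IoU of matched pairs (some toolkits report a distance instead), and F-1 is assumed to be the harmonic mean of detection precision and recall; the baselines may instead report the identity-based IDF1. The function name and argument layout are hypothetical, and the frame-level matching step is left out.

```python
def tracking_scores(num_gt, tp, fp, fn, id_switches, iou_sum):
    """Aggregate MOTA, MOTP and F-1 from totals accumulated over all frames.

    num_gt: ground-truth boxes; tp: matched pairs; fp / fn: false positives / negatives;
    id_switches: identity switches; iou_sum: summed IoU over the tp matched pairs.
    """
    mota = 1.0 - (fn + fp + id_switches) / num_gt
    motp = iou_sum / tp if tp else 0.0  # mean overlap of matched pairs
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"MOTA": mota, "MOTP": motp, "F1": f1}
```

For example, `tracking_scores(100, 90, 5, 10, 2, 72.0)` gives MOTA = 0.83, MOTP = 0.8 and F-1 = 180/195; note that MOTA can become negative when errors outnumber ground-truth boxes.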

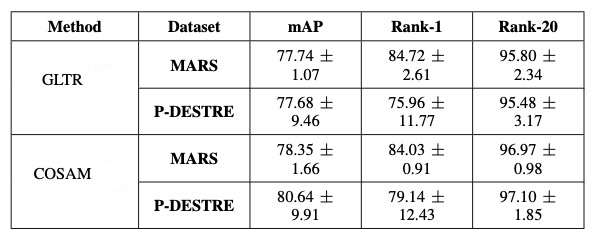

Task 3: Pedestrian Re-Identification

- 5-fold learning/gallery/query splits are available [here]

- Baseline pedestrian re-identification CMC scores of the GLTR [T3-1] and COSAM [T3-2] methods on the MARS and P-DESTRE datasets are available [here]

The results attained by both algorithms on both datasets are listed in the Table below, in terms of mean average precision (mAP) and cumulative matching characteristic (CMC) rank-1 and rank-20 values:

[T3-1] J. Li, J. Wang, Q. Tian, W. Gao and S. Zhang. Global-Local Temporal Representations for Video Person Re-Identification. arXiv, https://arxiv.org/abs/1908.10049v1, 2019.

[T3-2] A. Subramaniam, A. Nambiar and A. Mittal. Co-segmentation Inspired Attention Networks for Video-based Person Re-identification. In Proceedings of the International Conference on Computer Vision, pp. 562–572, 2019.
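The CMC rank-k and mAP values used for Tasks 3 and 4 can be computed from a query-by-gallery distance matrix. This Python/NumPy sketch assumes one distance per query/gallery pair and plain ID matching; it omits the same-camera filtering that some video re-identification protocols (e.g. on MARS) apply, so it illustrates the measures rather than the exact evaluation script. The function name is hypothetical.

```python
import numpy as np


def cmc_and_map(dist, query_ids, gallery_ids, ranks=(1, 20)):
    """dist: (num_query, num_gallery) distances, smaller = more similar."""
    dist = np.asarray(dist, dtype=float)
    q_ids, g_ids = np.asarray(query_ids), np.asarray(gallery_ids)
    max_rank = max(ranks)
    hits = np.zeros(max_rank)
    aps = []
    for i in range(len(q_ids)):
        # Ranked gallery for this query, True where the ID is correct.
        matches = g_ids[np.argsort(dist[i])] == q_ids[i]
        if not matches.any():
            continue  # no true match in the gallery: query is skipped
        first = int(np.argmax(matches))  # 0-based rank of the first correct match
        if first < max_rank:
            hits[first:] += 1  # cumulative: counted at this rank and all later ones
        positions = np.flatnonzero(matches)
        precisions = np.arange(1, len(positions) + 1) / (positions + 1)
        aps.append(precisions.mean())  # average precision for this query
    if not aps:
        raise ValueError("no query has a match in the gallery")
    cmc = hits / len(aps)
    return {f"rank-{k}": float(cmc[k - 1]) for k in ranks}, float(np.mean(aps))
```

Rank-1 rewards only the top-ranked gallery item, whereas mAP also accounts for where every other correct match of the same identity lands in the ranking.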

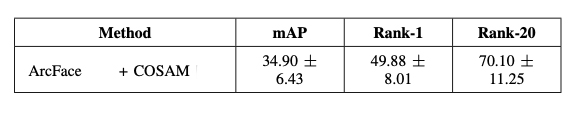

Task 4: Pedestrian Search

- 5-fold learning/gallery/query splits are available [here]

- Baseline pedestrian search CMC scores on the P-DESTRE dataset, obtained by fusing ArcFace [T4-1] and COSAM [T4-2] scores, are available [here]
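The fusion rule for the ArcFace (face) and COSAM (body) scores is not specified here. One common option, shown purely as an assumption, is a weighted sum of similarity scores after min-max normalising each query's row; the weight `w_face=0.5` and the function names are hypothetical.

```python
import numpy as np


def minmax_rows(scores):
    """Rescale each row (one query against the whole gallery) to [0, 1]."""
    s = np.asarray(scores, dtype=float)
    lo = s.min(axis=1, keepdims=True)
    hi = s.max(axis=1, keepdims=True)
    span = np.where(hi > lo, hi - lo, 1.0)  # constant rows map to all zeros
    return (s - lo) / span


def fuse_scores(face_sim, body_sim, w_face=0.5):
    """Weighted sum of normalised face and body similarities (queries x gallery)."""
    return w_face * minmax_rows(face_sim) + (1.0 - w_face) * minmax_rows(body_sim)
```

Normalising first keeps one matcher's score range from dominating the other. Ranking each query's gallery by `np.argsort(-fused, axis=1)` then gives the ordering from which CMC and mAP are computed; a distance-based evaluator can take `-fused` as its distance matrix.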

The results attained are listed in the Table below, in terms of mean average precision (mAP) and cumulative matching characteristic (CMC) rank-1 and rank-20 values:

[T4-1] J. Deng, J. Guo, N. Xue and S. Zafeiriou. ArcFace: Additive Angular Margin Loss for Deep Face Recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, doi: 10.1109/CVPR.2019.00482, 2019.

[T4-2] A. Subramaniam, A. Nambiar and A. Mittal. Co-segmentation Inspired Attention Networks for Video-based Person Re-identification. In Proceedings of the International Conference on Computer Vision, pp. 562–572, 2019.
